Teaching

CS 6756: Learning for Robot Decision Making

https://www.cs.cornell.edu/courses/cs6756/2023fa/

https://www.cs.cornell.edu/courses/cs6756/2022fa/

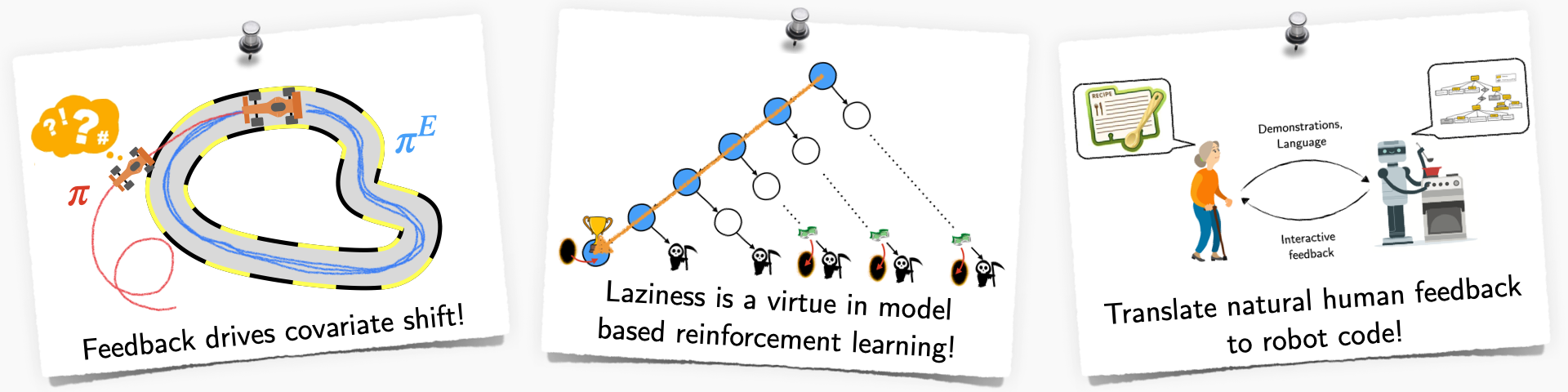

Machine learning has made significant advances in many AI applications, from language (e.g., ChatGPT) to vision (e.g., diffusion models). However, it has fallen short when it comes to making decisions, especially for robots interacting with the physical world. Robot decision making presents a unique set of challenges: the complexities of the real world, limited labelled data, hard physical constraints, safety when interacting with humans, and more. This graduate-level course dives deep into these issues, starting from the basics and moving through to the frontiers of robot learning. We look at:

- Planning in continuous state-action spaces over long horizons with hard physical constraints.

- Imitation learning from various modes of interaction (demonstrations, interventions), cast as a unified, game-theoretic framework.

- Practical reinforcement learning that leverages both model predictive control and model-free methods.

- Frontiers such as offline reinforcement learning, LLMs, diffusion policies and causal confounds.

CS 4756: Robot Learning

https://www.cs.cornell.edu/courses/cs4756/2024fa/

https://www.cs.cornell.edu/courses/cs4756/2024sp/

https://www.cs.cornell.edu/courses/cs4756/2023sp/

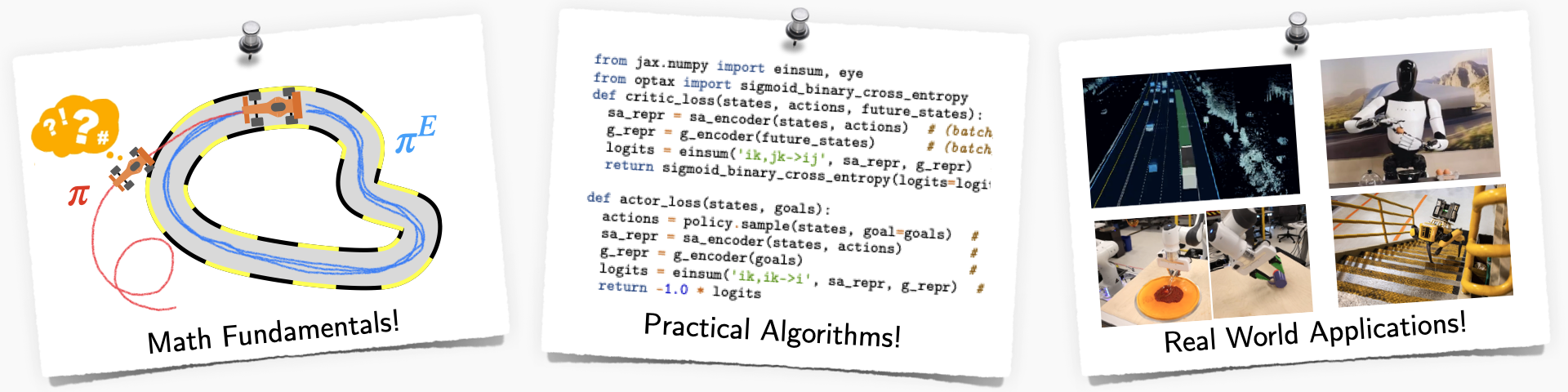

How do we get robots out of the lab and into the real world, with all its complexities?

Robots must solve two fundamental problems: (1) Perception: sense the world using different modalities, and (2) Decision making: act in the world by reasoning over decisions and their consequences. Machine learning promises to solve both problems in a scalable way using data. However, it has so far fallen short when it comes to robotics.

This course dives deep into robot learning, covering fundamental algorithms and challenges as well as case studies of real-world applications, from self-driving to manipulation. We look at:

- Learning perception models using probabilistic inference and 2D/3D deep learning.

- Imitation learning and interactive no-regret learning that handle distribution shift and the exploration/exploitation trade-off.

- Practical reinforcement learning leveraging both model predictive control and model-free methods.

- Open challenges in visuomotor skill learning, forecasting and offline reinforcement learning.

Imitation Learning: A Series of Deep Dives

In this 10-part series, we dive deep into imitation learning and build up a general framework. A journey through feedback, interventions and more!

Core Concepts in Robotics

An introductory series that revisits core concepts in robotics in a contemporary light.

Interactive Online Learning: A Unified Algorithmic Framework

In this series, we try to understand the fundamental question that ties together all of robot learning: “How can a robot learn from online interactions?” Our goal is to build up a unified mathematical framework to solve recurring problems in reinforcement learning, imitation learning, model predictive control, and planning.

CSE 490R: Mobile Robots (at UW)

https://courses.cs.washington.edu/courses/cse490r/19sp/

Mobile Robots delves into the building blocks of autonomous systems that operate in the wild. We will cover topics related to state estimation (Bayes filtering, probabilistic motion and sensor models), control (feedback, Lyapunov, LQR, MPC), planning (roadmaps, heuristic search, incremental densification) and online learning. Students will form teams and implement algorithms on 1/10th-scale rally cars as part of their assignments. Concepts from all of the assignments will culminate in a final project with a demo on the rally cars. The course involves programming in Linux and Python, with ROS for interfacing with the robot.